Open Source Terraform Module: CloudTrail Logs from S3 to Kinesis Data Streams

We are happy to provide an open-source Terraform module enabling automatic delivery of CloudTrail logs from S3 to a Kinesis Data Stream.

https://github.com/nexthink-oss/terraform-aws-cloudtrail-s3-to-kinesis/

Introduction

A primer on CloudTrail logs

When securing an AWS environment, CloudTrail logs are a must-have, as they record the actions taken against the AWS API in your AWS account(s). They are useful not only to detect threats, but also to understand which permissions people actually use and to flag legitimate activity that may be harmful, such as manually changing security groups in production environments. They are also particularly useful when debugging IAM issues.

It’s important to keep in mind that CloudTrail is only part of the equation: we should not forget about VPC Flow Logs, DNS query logs, application logs, database logs, etc. Even within CloudTrail, some noisy events, known as data events, are not logged by default and must be explicitly enabled. This is the case for S3 object-level events (object reads and writes), Lambda function invocations and DynamoDB queries. Typically, it makes sense to enable S3 data events only for sensitive buckets, such as those used for backups, as opposed to buckets holding logs or static web assets.
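To illustrate scoping data events to sensitive buckets only, here is a minimal sketch that builds the `EventSelectors` structure accepted by CloudTrail's `PutEventSelectors` API (exposed in boto3 as `put_event_selectors`). The function name and bucket name are hypothetical. The API call itself is left as a comment so the sketch runs without AWS credentials.

```python
from typing import Dict, List


def s3_data_event_selectors(sensitive_buckets: List[str]) -> List[Dict]:
    """Build CloudTrail event selectors that log S3 object-level (data) events
    only for the given buckets, while keeping management events enabled."""
    return [
        {
            "ReadWriteType": "All",           # log both object reads and writes
            "IncludeManagementEvents": True,  # keep the default management events
            "DataResources": [
                {
                    "Type": "AWS::S3::Object",
                    # A bucket ARN followed by "/" selects every object in that bucket
                    "Values": [f"arn:aws:s3:::{bucket}/" for bucket in sensitive_buckets],
                }
            ],
        }
    ]


if __name__ == "__main__":
    # Hypothetical bucket name, for illustration only
    selectors = s3_data_event_selectors(["example-backups-bucket"])
    print(selectors)
    # With boto3, the selectors could then be applied with something like:
    # boto3.client("cloudtrail").put_event_selectors(TrailName="...", EventSelectors=selectors)
```

In Terraform, the same settings map to the `event_selector` block (with a nested `data_resource`) of the `aws_cloudtrail` resource.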

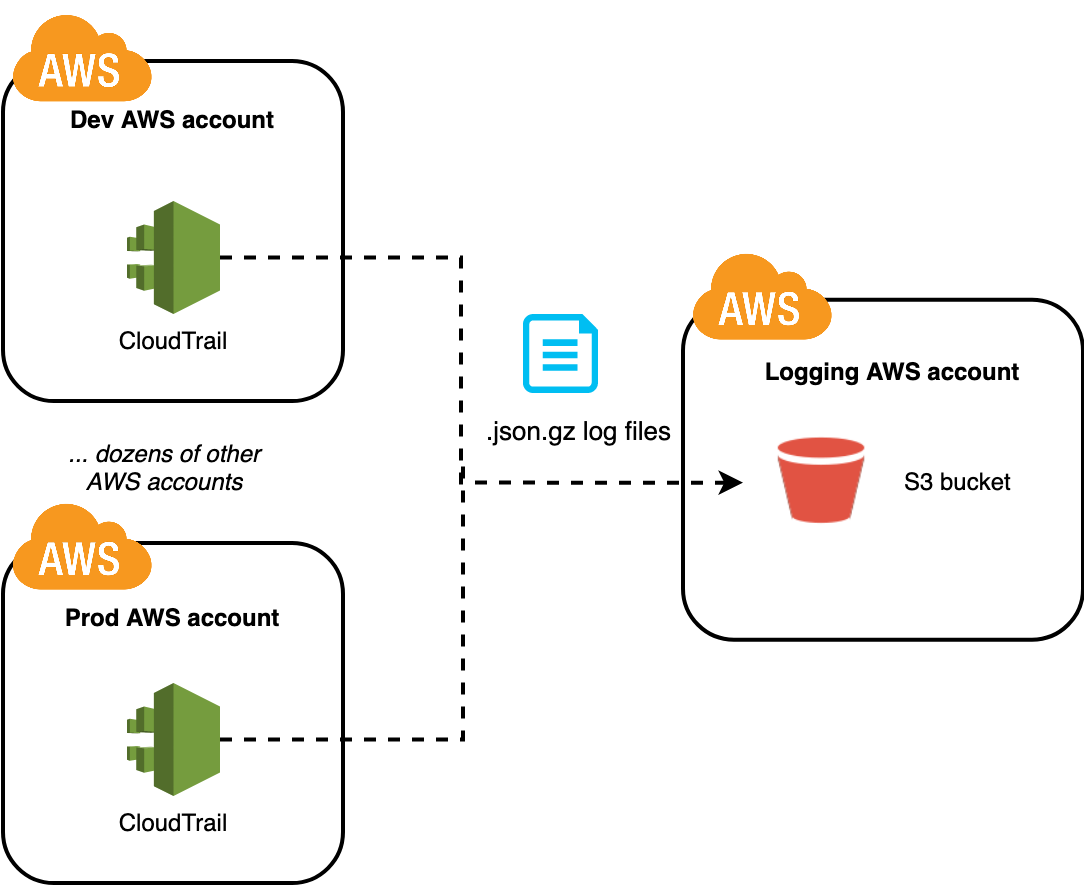

CloudTrail in a multi-account AWS environment

Nexthink leverages a multi-account AWS environment with over 50 AWS accounts for different business applications and environments. These accounts are managed through AWS Organizations. As it would be highly impractical to enable and collect CloudTrail logs individually for each AWS account, we use CloudTrail in organization mode.

In our AWS Management Account, we create a CloudTrail organization trail using Terraform:

```hcl
resource "aws_cloudtrail" "organization-trail" {
  name = "organization-trail"

  is_organization_trail         = true
  is_multi_region_trail         = true
  s3_bucket_name                = "nexthink-organization-trail"
  s3_key_prefix                 = "organization-trail"
  include_global_service_events = true # Mandatory for multi-region trails

  # …
}
```

This ensures that CloudTrail is automatically enabled for all current and future AWS accounts of our organization (in all regions) and delivers log files to a centralized S3 bucket living in a separate AWS account.

All current and future AWS accounts in the organization automatically send their CloudTrail logs to a centralized S3 bucket.
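Because every account writes to the same bucket, the object key carries the origin of each log file. The following is a minimal sketch, assuming the key layout AWS documents for organization trails (`[prefix/]AWSLogs/<org-id>/<account-id>/CloudTrail/<region>/YYYY/MM/DD/<file>.json.gz`). It recovers the account, region and date from a key. The function name and example keys are hypothetical.

```python
import re
from typing import Dict, Optional

# Assumed layout of organization trail log files:
# [<prefix>/]AWSLogs/<org-id>/<account-id>/CloudTrail/<region>/YYYY/MM/DD/<file>.json.gz
_ORG_TRAIL_KEY = re.compile(
    r"^(?:(?P<prefix>.+)/)?AWSLogs/(?P<org_id>o-[a-z0-9]+)/(?P<account_id>\d{12})/"
    r"CloudTrail/(?P<region>[a-z0-9-]+)/(?P<year>\d{4})/(?P<month>\d{2})/(?P<day>\d{2})/"
    r"[^/]+\.json\.gz$"
)


def parse_org_trail_key(key: str) -> Optional[Dict[str, Optional[str]]]:
    """Return the fields encoded in an organization trail log file key, or None
    for any other object (for example digest files under CloudTrail-Digest/)."""
    match = _ORG_TRAIL_KEY.match(key)
    return match.groupdict() if match else None
```

A function like this can also serve as a cheap filter in the processing code, so that objects which are not CloudTrail log files are skipped.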

Centralizing CloudTrail logs helps us ensure that the logs can be easily queried from a central location, and that an attacker gaining administrator access to an AWS account is not able to tamper with them (since they are not stored in the account).

My CloudTrail logs are in S3; now what?

Once CloudTrail logs are in S3, they are typically either shipped to third-party services (Splunk, Datadog, New Relic, Graylog…), or queried directly from S3 using Amazon Athena with a SQL-like language.

For real-time processing and aggregation, it is also useful to be able to send CloudTrail logs stored in S3 to a Kinesis Data Stream.

Amazon Kinesis Data Streams (KDS) is a massively scalable and durable real-time data streaming service. KDS can continuously capture gigabytes of data per second from hundreds of thousands of sources such as website clickstreams, […], logs, and location-tracking events. The data collected is available in milliseconds to enable real-time analytics use cases such as real-time dashboards, real-time anomaly detection, dynamic pricing, and more.

https://aws.amazon.com/kinesis/data-streams/

Since no out-of-the-box integration exists to send CloudTrail logs stored in S3 to a Kinesis Data Stream (referred to below as “Kinesis stream”), we developed our own integration and open-sourced it to allow the community to benefit from it. In our context, we needed our CloudTrail logs to end up in a Kinesis stream so that our managed detection & response partner could consume and aggregate them.

Shipping CloudTrail logs to a Kinesis stream

The problem

As described at the beginning of this post, the CloudTrail delivery service automatically uploads CloudTrail log files (gzip-compressed JSON files) to our designated S3 bucket.

Example of a CloudTrail log file delivered by AWS to our S3 bucket. Note that a single log file can contain from one to thousands of individual CloudTrail events.

Consequently, we need a way to implement the following logic:

- Every time a new log file is uploaded to S3

- Decompress the log file on the fly

- Write it to the Kinesis stream
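The "decompress on the fly" step can be sketched as follows. This is a minimal illustration, not the module's actual code. It assumes the log file is available as a readable binary stream: an in-memory buffer here, or in a Lambda function typically the `Body` of an S3 `GetObject` response. Individual events are read from the top-level `Records` array of a CloudTrail log file. The function name is hypothetical.

```python
import gzip
import json
from typing import BinaryIO, Dict, List


def read_cloudtrail_events(fileobj: BinaryIO) -> List[Dict]:
    """Decompress a gzipped CloudTrail log file while reading it from a binary
    stream, and return its individual events (the top-level "Records" array)."""
    with gzip.GzipFile(fileobj=fileobj, mode="rb") as gz:
        document = json.load(gz)  # GzipFile decompresses as json.load reads it
    return document.get("Records", [])
```

Note that `json.load` still holds the whole decompressed document in memory. This is usually acceptable for a single log file, but a memory-constrained function would need a streaming JSON parser.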

We also need to take a few constraints into account (no pun intended):

- A single CloudTrail log file can contain from one to thousands of CloudTrail events

- A single message sent to Kinesis cannot be larger than 1 MB

- When publishing messages to Kinesis by batches (using PutRecords), the overall request cannot be larger than 10 MB

Due to these constraints, we cannot assume that one CloudTrail log file will produce one Kinesis message; we need to chunk CloudTrail events before publishing them to the Kinesis stream.
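One possible chunking strategy is sketched below; it is not necessarily the one the module uses. First, events are greedily packed into newline-delimited JSON payloads that stay under the per-record limit. Then payloads are grouped into `PutRecords` batches that stay under the per-request size limit and under the 500-records-per-request cap of `PutRecords`. The default sizes mirror the limits quoted above and should be checked against the current Kinesis Data Streams quotas. For simplicity, partition key bytes, which also count toward these limits, are not accounted for. The function names and the newline-delimited payload format are assumptions.

```python
import json
from typing import Iterable, Iterator, List

MAX_RECORD_BYTES = 1_000_000    # per-record limit quoted above (1 MB)
MAX_REQUEST_BYTES = 10_000_000  # per-PutRecords limit quoted above
MAX_REQUEST_RECORDS = 500       # PutRecords accepts at most 500 records per call


def pack_records(events: Iterable[dict],
                 max_record_bytes: int = MAX_RECORD_BYTES) -> Iterator[bytes]:
    """Greedily pack events into newline-delimited JSON payloads, each no larger
    than max_record_bytes. Event order is preserved."""
    buffer: List[bytes] = []
    size = 0
    for event in events:
        line = json.dumps(event, separators=(",", ":")).encode("utf-8") + b"\n"
        if len(line) > max_record_bytes:
            raise ValueError("a single event exceeds the per-record size limit")
        if size + len(line) > max_record_bytes:
            yield b"".join(buffer)  # current payload is full: emit it
            buffer, size = [], 0
        buffer.append(line)
        size += len(line)
    if buffer:
        yield b"".join(buffer)


def batch_records(payloads: Iterable[bytes],
                  max_request_bytes: int = MAX_REQUEST_BYTES,
                  max_records: int = MAX_REQUEST_RECORDS) -> Iterator[List[bytes]]:
    """Group payloads into batches that respect both the request size limit
    and the maximum number of records per PutRecords call."""
    batch: List[bytes] = []
    size = 0
    for payload in payloads:
        if batch and (size + len(payload) > max_request_bytes or len(batch) == max_records):
            yield batch
            batch, size = [], 0
        batch.append(payload)
        size += len(payload)
    if batch:
        yield batch
```

Packing several events per record, rather than sending one event per record, keeps the number of records (and thus requests) low when a log file holds thousands of small events.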

The solution

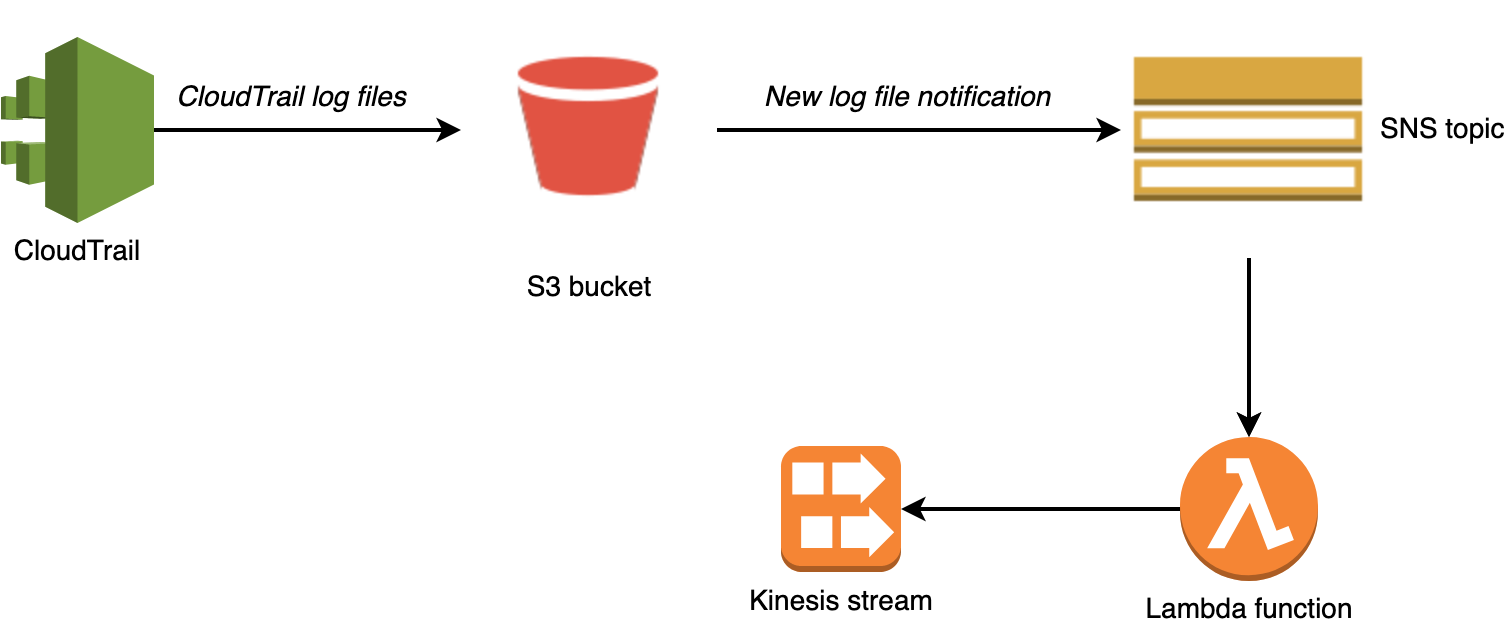

We use the following services and components to implement the solution.

Solution architecture; from CloudTrail to a Kinesis stream

- We configure an S3 event notification that publishes a message to an SNS topic every time a new CloudTrail log file is delivered.

- A Lambda function is subscribed to the SNS topic and runs automatically for every new log file.

- The Lambda function chunks CloudTrail events as needed and publishes them to the target Kinesis stream.
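The glue around the chunking logic might look like the following sketch; it is not the module's actual code. When S3 notifications go through SNS, each SNS message body is itself a JSON-encoded S3 event notification, and object keys in it are URL-encoded. On the publishing side, `kinesis_client` is assumed to be a boto3 Kinesis client (`boto3.client("kinesis")`). The random partition key is an illustrative choice to spread records across shards. The function names are hypothetical.

```python
import json
import uuid
from typing import Any, Dict, List, Tuple
from urllib.parse import unquote_plus


def s3_objects_from_sns_event(event: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Extract (bucket, key) pairs from a Lambda event delivered by SNS, where
    each SNS message body is an S3 event notification encoded as JSON."""
    objects = []
    for sns_record in event.get("Records", []):
        notification = json.loads(sns_record["Sns"]["Message"])
        # S3 sends an "s3:TestEvent" without "Records" when notifications are set up
        for s3_record in notification.get("Records", []):
            bucket = s3_record["s3"]["bucket"]["name"]
            key = unquote_plus(s3_record["s3"]["object"]["key"])  # keys are URL-encoded
            objects.append((bucket, key))
    return objects


def publish_batch(kinesis_client: Any, stream_name: str, payloads: List[bytes]) -> None:
    """Send one batch with PutRecords and fail loudly if any record was rejected."""
    response = kinesis_client.put_records(
        StreamName=stream_name,
        # Random partition keys spread records across shards (illustrative choice)
        Records=[{"Data": p, "PartitionKey": uuid.uuid4().hex} for p in payloads],
    )
    failed = response.get("FailedRecordCount", 0)
    if failed > 0:
        raise RuntimeError(f"{failed} record(s) were rejected by Kinesis")
```

Raising on partial failure makes the whole invocation fail and be retried, which can duplicate records that were already accepted. A production version would rather retry only the failed entries, which `PutRecords` reports per record in its response.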

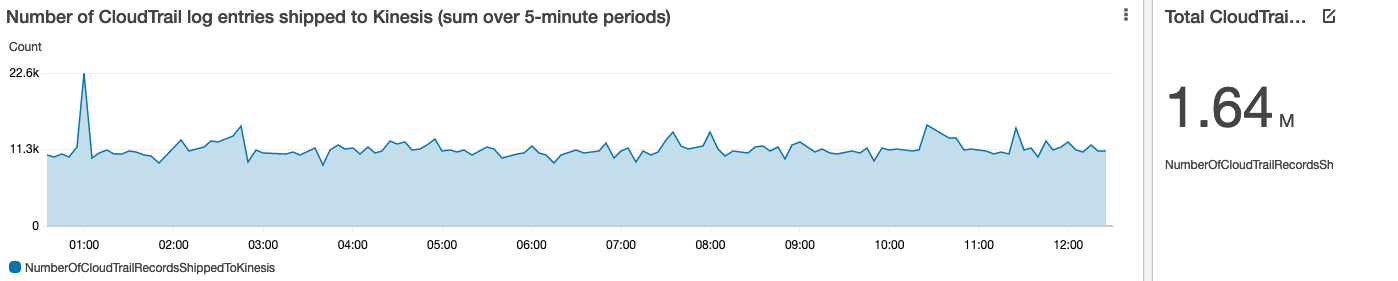

For the Lambda function publishes a custom CloudWatch metric allowing to visualize the volume of CloudTrail events processed (that’s CloudTrail events – not CloudTrail log files – as a reminder, a single CloudTrail log file can contain from one to thousands of events).

Visualizing the volume of CloudTrail events processed by the Lambda function
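Publishing such a metric boils down to one `PutMetricData` call per invocation, with the number of events (not files) as the value. This is a minimal sketch assuming a boto3 CloudWatch client (`boto3.client("cloudwatch")`). The namespace, metric name and function name are hypothetical.

```python
from typing import Any


def publish_event_count(cloudwatch_client: Any, event_count: int,
                        namespace: str = "CloudTrailToKinesis",  # hypothetical namespace
                        metric_name: str = "ProcessedEvents") -> None:  # hypothetical name
    """Record how many CloudTrail events (not log files) one invocation processed."""
    cloudwatch_client.put_metric_data(
        Namespace=namespace,
        MetricData=[{"MetricName": metric_name, "Value": float(event_count), "Unit": "Count"}],
    )
```

Graphing this metric with the `Sum` statistic then gives the number of events processed per period, regardless of how many invocations contributed to it.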

Open sourcing a reusable Terraform module

At Nexthink, we make heavy use of infrastructure-as-code, and more specifically Terraform. We implemented the architecture described above as a reusable Terraform module, which we’re happy to open-source today at https://github.com/nexthink/terraform-aws-cloudtrail-s3-to-kinesis.

You can easily instantiate the module, point it to the S3 bucket holding your CloudTrail logs, and watch them flow into a Kinesis stream! Check out our complete example.

Testing the solution

“Infrastructure-as-code” shouldn’t just be a buzzword. When we think about code, we think about tests, continuous integration and semantic versioning; these practices should therefore apply to infrastructure-as-code as well.

For this project, we leverage Terratest to write end-to-end tests. More specifically, the end-to-end tests perform the following steps:

- Instrument Terraform to spin up real infrastructure (S3 bucket, SNS topic, Kinesis stream…)

- Upload a sample CloudTrail log file to the S3 bucket

- Ensure that a corresponding message is produced to the Kinesis stream and has the expected contents

- Instrument Terraform to destroy the infrastructure

Running these tests gives us the assurance that the overall data flow is working end to end, and that no link in the chain is broken. They take around 2 minutes to run.

Cost

The two main cost factors are the Lambda function and the Kinesis stream. The Lambda function is billed per request and by execution duration multiplied by its configured memory size. The Kinesis stream is billed per shard-hour, for the data ingested, and for extended data retention beyond the default 24 hours.

We use a configuration with 16 shards and a 7-day retention period. Our Lambda processes around 4M CloudTrail events per day, in around 70k invocations. With this setup, the cost per day roughly amounts to:

- $16 for the Kinesis stream. Half of it is for the 7-day retention period, half of it for shard-hours.

- Less than $0.01 for the Lambda function
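The cost model described above can be written down as a small estimator. This is a rough sketch: the unit prices are placeholders to fill in from the AWS pricing pages for your region. It assumes extended (up to 7 days) retention is billed per shard-hour, and it ignores free tiers, ingested-data (PUT payload unit) charges and data transfer. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict

HOURS_PER_DAY = 24


@dataclass
class UnitPrices:
    """Placeholder unit prices in USD; take real values from AWS pricing for your region."""
    shard_hour: float                     # Kinesis provisioned shard-hour
    extended_retention_shard_hour: float  # Kinesis extended retention, per shard-hour
    lambda_request: float                 # price of a single Lambda invocation
    lambda_gb_second: float               # price of one GB-second of Lambda execution


def estimate_daily_cost(prices: UnitPrices, shards: int, invocations: int,
                        avg_duration_s: float, memory_gb: float) -> Dict[str, float]:
    """Rough daily cost; ignores free tiers, ingested-data charges and data transfer."""
    shard_hours = shards * HOURS_PER_DAY * prices.shard_hour
    retention = shards * HOURS_PER_DAY * prices.extended_retention_shard_hour
    lambda_cost = invocations * (
        prices.lambda_request + avg_duration_s * memory_gb * prices.lambda_gb_second
    )
    return {
        "kinesis_shard_hours": shard_hours,
        "kinesis_extended_retention": retention,
        "lambda": lambda_cost,
        "total": shard_hours + retention + lambda_cost,
    }
```

Both Kinesis terms scale with the number of shards times 24 hours. When the shard-hour and extended-retention prices are similar, they come out roughly equal, which is consistent with the half-and-half split reported above.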

Conclusion

This Terraform module is released under the BSD 3-clause license, meaning it can be reused, including for commercial purposes. We aim to increase our contributions to the open-source community and expect to release further projects showcasing our journey towards a cloud-native architecture very soon!

We appreciate feedback and bug reports through GitHub issues or by e-mail.
